A new feature Instagram quietly put into place could create problems for companies, but smart content and an adjusted strategy can keep it from being an issue.

This month saw a new feature rolled out by Instagram called “Sensitive Content Control.” On the surface, it seems logical: if people don’t want to see problematic photographs or offensive material, they shouldn’t have to. And with increased pressure to combat the spread of false information and issues regarding the vaccine rollout, it makes sense.

However, with that new feature come adjustments to an algorithm that’s already hard to master. With those adjustments come new considerations companies have to think about before posting. This is especially the case for those advocating in political areas or for non-profits dealing with mental health and other areas that could provoke highly emotional responses.

For advocacy in areas such as mental health and emotional support systems, content that can get flagged by this new control system is not ideal. Companies have been able to use certain hashtags, such as #mentalhealth or #depression, for discovery and outreach to those seeking help. However, some hashtags, such as #brain and #thought, are now seeing more content hidden because users have taken them over in a negative manner.

The intent is to combat increasing misinformation on social media as well as reduce the risk of exposing minors to harmful or dangerous content, which is admirable. In execution, however, its application is already causing issues.

Artists are reporting their posts becoming difficult, if not impossible, to find through search features using hashtags if their art is determined to include “sensitive content,” even if the posts seem to be within normal parameters and guidelines. The net being cast is incredibly wide, and therefore can cause issues with visibility of content that is completely within social norms on the platform.

Political advocates, even those pushing for reform and doing good, are seeing their content, and sometimes even their accounts, become increasingly hard to find organically. This is where a widespread update controlled by data and analytics, without human inspection, becomes a problem. Anything political, good or bad, becomes invisible. And as a private company, that’s a decision Instagram is allowed to make. The question then becomes, when does a solution become part of a different problem?

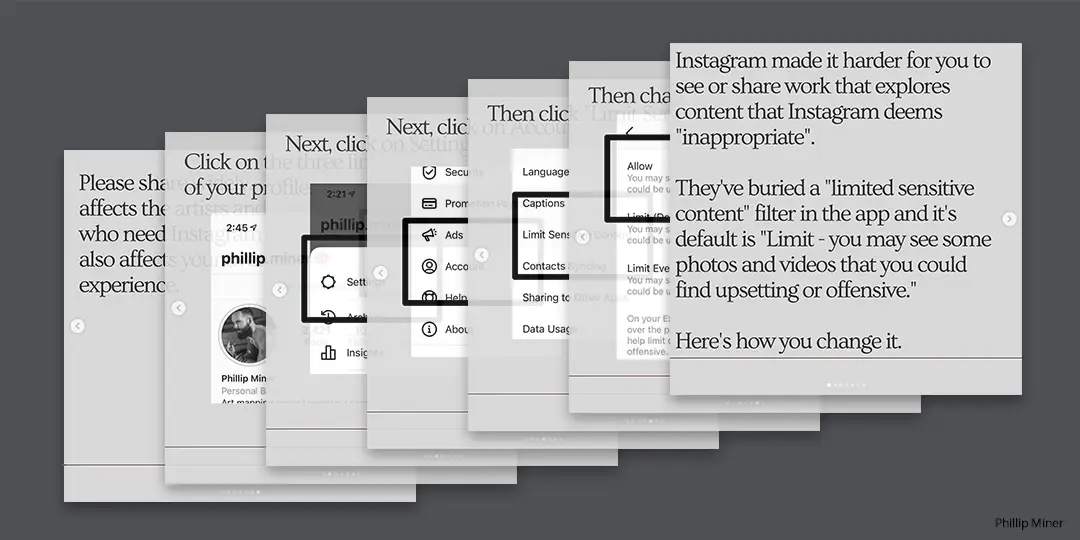

In addition, the setting that allows sensitive content is hard to find, and for minors the option isn’t there. In the Settings area, users over 18 can access the “Limit Sensitive Content” area and choose “Allow,” “Limit (Default),” or “Limit Even More.” The last option reduces the number of photos and videos that could be “upsetting or offensive” according to Instagram’s standards. Users under 18 cannot select the “Allow” option to see photos or videos that could be upsetting or offensive. They can only choose “Limit” or “Limit Even More.”

This becomes a problem for industries like mental health organizations or other advocates because their content could get marked by Instagram through no fault of the account manager. Mental health is a sensitive subject, and, with this new feature, no human will be distinguishing between content that may upset people and content that may provide tools for assistance and beneficial resources.

What happens if a minor is in crisis and using a tool like Instagram to search for coping mechanisms or local mental health resources? The algorithm may consider mental and emotional crisis information sensitive and, as a result, make it invisible to minors and to anyone who has limited sensitive content.

There could be an argument made that social media is not the place for it. But companies and organizations have to go where their audience is. If the platform that reaches their audience best is censoring their content, their outreach becomes a silent effort that may miss someone they would otherwise have reached.

Many don’t even know this new feature was rolled out by Instagram and put in place by the Facebook-owned company as the account default. This is why photographer Phillip Miner’s Instagram post of a guide on how people can combat this feature saw massive engagement and coverage on news outlets in only a few days.

So what do businesses need to do to avoid disappearing in search results or getting flagged for sensitive content?

This is where the lack of transparency by Facebook and Instagram becomes frustrating for many. However, there are steps brands and companies can take.

- Review Instagram’s Policies and Reporting standards, including its Community Guidelines. While they won’t cover everything you need to know, they’re a great start. It’s not glamorous work, by any means, but knowing what will cause your account to get flagged for content is imperative, especially if you are an advocacy group and find success on the platform. Here is their post about the Sensitive Content Control, specifically.

- Avoid hashtags that have caused a lot of the fuss to begin with. Right now, the big focus for Facebook and Instagram is on the spread of misinformation. As a result, if you are creating posts that are resources for coping with mental health issues regarding Covid-19 or strategies for finding help, avoid using the hashtag #Covid19. Almost everyone spreading lies and misinformation is using this hashtag, so now it’s almost a guarantee you’ll have issues with the post’s visibility.

- Does that mean you are part of the problem too? Absolutely not. However, without a viable solution to have humans spot check every post (which is millions per hour), Instagram has to put algorithms and bots in play to scan content and automatically flag areas for review or tag them as sensitive content. As a result, unless it’s vital for you to use the hashtag, don’t. Find other popular, relevant hashtags related to your audience and your content.

- Review the hashtags that are getting consistently flagged by Instagram. Postify constantly updates the list of 2021 Hashtags being banned on Instagram here. Their list is incredibly helpful because it reviews popular hashtags with “currently hidden” content, which means they are now in a category classified as sensitive content by default.

- A final small step is to share instructions on how your followers can adjust their settings. Be sure to explain why. If you perform political advocacy, explain that the subject matter you’re posting may be flagged because of the intense atmosphere of the current situation. Encourage sharing. If you are a mental health resource for a community, share the instructions with your followers while explaining that Instagram wants to help limit sensitive content; however, it can’t scan every post that comes through. As a result, allowing sensitive content will make sure your posts aren’t buried for followers who love and admire the helpful messages and support you provide, even if some outside of your followers and organization may deem the subject matter itself touchy.

Reach out!

If you have more questions about digital and social media best practices, please feel free to reach out to us. We’re always happy to chat and see how our experts can help. If you have additional resources that you think would be good to review or send to others, please reach out and let us know.